Quick Start Guide

Welcome to Unoplat Code Confluence! This guide will help you quickly set up and start using our platform to enhance your codebase management and collaboration.

Table of Contents

- Introduction

- Prerequisites

- 1. Graph Database Setup

- 2. Generate Summary and Ingest Codebase

- 3. Setup Chat Interface

- Troubleshooting

Introduction

Unoplat Code Confluence empowers developers to effortlessly navigate and understand complex codebases. By leveraging a graph database and an intuitive chat interface, our platform enhances collaboration and accelerates onboarding.

Prerequisites

Before you begin, ensure that Docker (used to run Neo4j in step 1), pipx, and Poetry are installed on your system. Poetry can be installed with pipx:

pipx install poetry

1. Graph Database Setup

Installation

- Run the Neo4j Container

docker run \
    --name neo4j-container \
    --restart always \
    --publish 7474:7474 \
    --publish 7687:7687 \
    --env NEO4J_AUTH=neo4j/Ke7Rk7jB:Jn2Uz: \
    --volume /Users/jayghiya/Documents/unoplat/neo4j-data:/data \
    --volume /Users/jayghiya/Documents/unoplat/neo4j-plugins/:/plugins \
    neo4j:5.23.0
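Once the container is running, it can help to confirm that the two published ports accept connections before moving on. The following is a minimal sketch using only the Python standard library; the helper name `is_port_open` and the timeout value are illustrative assumptions and are not part of Unoplat Code Confluence or Neo4j.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # 7474 is the HTTP (browser) port and 7687 the Bolt port published above.
    for port in (7474, 7687):
        state = "reachable" if is_port_open("localhost", port) else "not reachable"
        print(f"localhost:{port} is {state}")
```

An open port only shows that something is listening; it does not verify the credentials set through NEO4J_AUTH.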

2. Generate Summary and Ingest Codebase

Ingestion Configuration

{
    "local_workspace_path": "/Users/jayghiya/Documents/unoplat/textgrad/textgrad",
    "output_path": "/Users/jayghiya/Documents/unoplat",
    "output_file_name": "unoplat_textgrad.md",
    "codebase_name": "textgrad",
    "programming_language": "python",
    "repo": {
        "download_url": "archguard/archguard",
        "download_directory": "/Users/jayghiya/Documents/unoplat"
    },
    "api_tokens": {
        "github_token": "Your github pat token"
    },
    "llm_provider_config": {
        "openai": {
            "api_key": "Your openai api key",
            "model": "gpt-4o-mini",
            "model_type": "chat",
            "max_tokens": 512,
            "temperature": 0.0
        }
    },
    "logging_handlers": [
        {
            "sink": "~/Documents/unoplat/app.log",
            "format": "<green>{time:YYYY-MM-DD at HH:mm:ss}</green> | <level>{level}</level> | <cyan>{name}</cyan>:<cyan>{function}</cyan>:<cyan>{line}</cyan> | <magenta>{thread.name}</magenta> - <level>{message}</level>",
            "rotation": "10 MB",
            "retention": "10 days",
            "level": "DEBUG"
        }
    ],
    "parallisation": 3,
    "sentence_transformer_model": "jinaai/jina-embeddings-v3",
    "neo4j_uri": "bolt://localhost:7687",
    "neo4j_username": "neo4j",
    "neo4j_password": "Ke7Rk7jB:Jn2Uz:"
}
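Before passing the file to the ingestion utility, a quick check for missing keys can catch copy-and-paste mistakes early. The sketch below is only an illustration: the list of keys is taken from the example configuration above, and which of them the utility actually requires is an assumption. The names `REQUIRED_KEYS`, `missing_keys`, and `load_config` are hypothetical helpers, not part of the tool.

```python
import json
from pathlib import Path

# Top-level keys that appear in the example configuration above.
# Whether the utility treats all of them as mandatory is an assumption.
REQUIRED_KEYS = (
    "local_workspace_path",
    "output_path",
    "output_file_name",
    "codebase_name",
    "programming_language",
    "llm_provider_config",
    "sentence_transformer_model",
    "neo4j_uri",
    "neo4j_username",
    "neo4j_password",
)


def missing_keys(config: dict) -> list[str]:
    """Return the expected top-level keys that are absent from config."""
    return [key for key in REQUIRED_KEYS if key not in config]


def load_config(path: str) -> dict:
    """Parse the JSON file and fail early if expected keys are missing."""
    config = json.loads(Path(path).read_text(encoding="utf-8"))
    missing = missing_keys(config)
    if missing:
        raise KeyError(f"config is missing keys: {', '.join(missing)}")
    return config
```

Calling `load_config("/path/to/your/config.json")` would then either return the parsed dictionary or raise a `KeyError` naming the absent keys.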

Note: For sentence_transformer_model, only Hugging Face sentence embedding models with up to 4096 dimensions are currently supported. The upper limit comes from Neo4j's vector index. Make sure your chosen model meets this requirement.
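The dimension limit can be checked before ingestion. The sketch below is a hedged illustration: `fits_vector_index` and the constant name are hypothetical, and the commented lines assume the sentence-transformers package is installed (they are left as comments because they would download the model).

```python
NEO4J_MAX_VECTOR_DIMENSIONS = 4096  # limit stated in the note above


def fits_vector_index(dimension: int, limit: int = NEO4J_MAX_VECTOR_DIMENSIONS) -> bool:
    """Return True if an embedding of this size is within the index limit."""
    return 0 < dimension <= limit


# With sentence-transformers installed, a model's dimension can be read
# roughly like this (not executed here because it downloads the model):
#
#     from sentence_transformers import SentenceTransformer
#     model = SentenceTransformer("jinaai/jina-embeddings-v3", trust_remote_code=True)
#     dimension = model.get_sentence_embedding_dimension()

if __name__ == "__main__":
    for dimension in (384, 1024, 4096, 8192):
        print(dimension, fits_vector_index(dimension))
```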

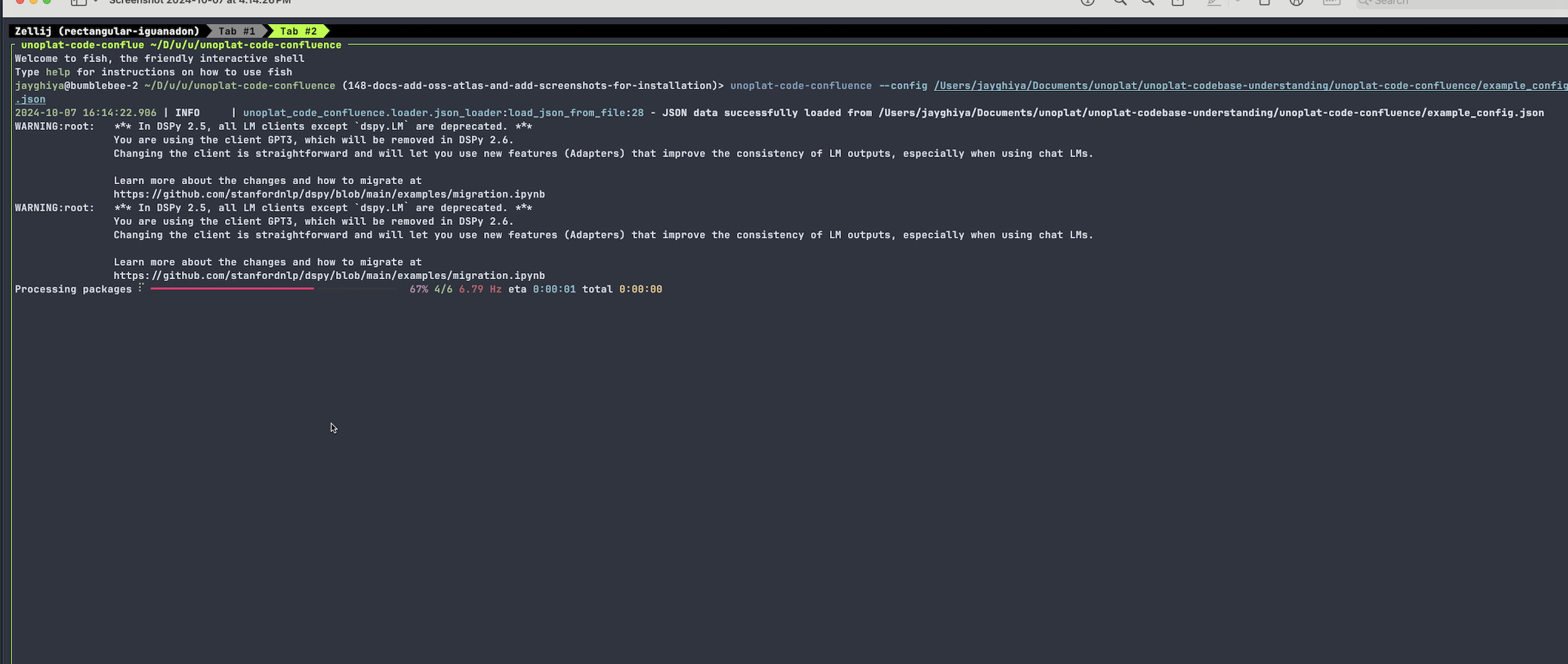

Run the Unoplat Code Confluence Ingestion Utility

- Installation

pipx install 'git+https://github.com/unoplat/unoplat-code-confluence.git@v0.14.0#subdirectory=unoplat-code-confluence'

- Run the Ingestion Utility

unoplat-code-confluence --config /path/to/your/config.json

- Example Run

After running the ingestion utility, you'll find the generated markdown file in the specified output directory. The file contains a comprehensive summary of your codebase. The summary and other relevant metadata are also stored in the graph database.

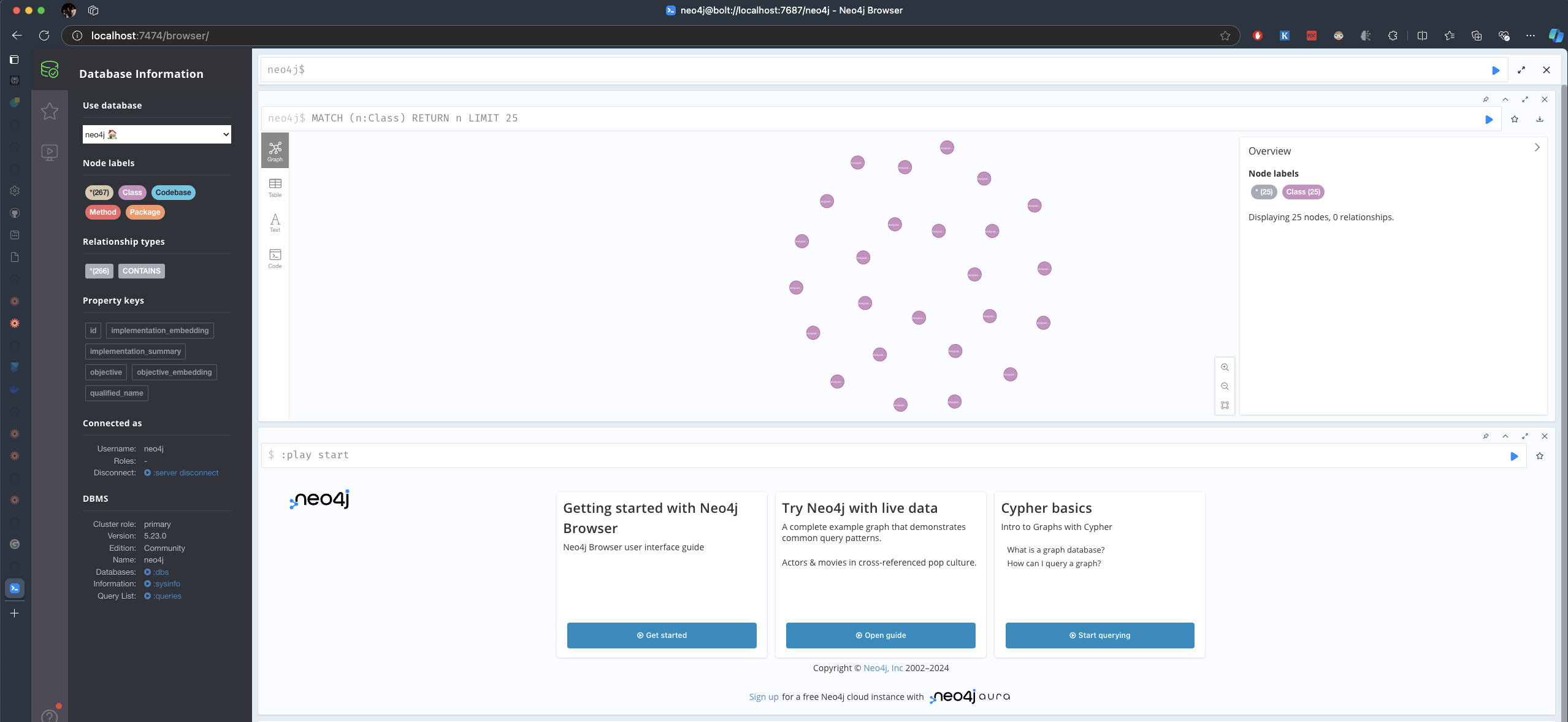

You can also visualize the graph database in the Neo4j Browser at http://localhost:7474/browser/

3. Setup Chat Interface

Query Engine Configuration

{
    "sentence_transformer_model": "jinaai/jina-embeddings-v3",
    "neo4j_uri": "bolt://localhost:7687",
    "neo4j_username": "neo4j",
    "neo4j_password": "your neo4j password",
    "provider_model_dict": {
        "model_provider": "openai/gpt-4o-mini",
        "model_provider_args": {
            "api_key": "your openai api key",
            "max_tokens": 500,
            "temperature": 0.0
        }
    }
}
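The query engine configuration repeats four fields from the ingestion configuration. Assuming the query engine should point at the same database and use the same embedding model as ingestion (the guide's examples share these values, but it does not state this as a rule), the shared fields could be copied rather than retyped. `SHARED_KEYS` and `build_query_config` below are hypothetical helpers for illustration only.

```python
import json

# Fields that appear in both example configurations.
SHARED_KEYS = (
    "sentence_transformer_model",
    "neo4j_uri",
    "neo4j_username",
    "neo4j_password",
)


def build_query_config(ingestion_config: dict, model_provider: str, api_key: str) -> dict:
    """Copy the shared fields and attach the chat model settings."""
    query_config = {key: ingestion_config[key] for key in SHARED_KEYS}
    query_config["provider_model_dict"] = {
        "model_provider": model_provider,
        "model_provider_args": {
            "api_key": api_key,
            # Values mirror the example configuration above.
            "max_tokens": 500,
            "temperature": 0.0,
        },
    }
    return query_config


if __name__ == "__main__":
    ingestion = {
        "sentence_transformer_model": "jinaai/jina-embeddings-v3",
        "neo4j_uri": "bolt://localhost:7687",
        "neo4j_username": "neo4j",
        "neo4j_password": "your neo4j password",
    }
    config = build_query_config(ingestion, "openai/gpt-4o-mini", "your openai api key")
    print(json.dumps(config, indent=2))
```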

Note: For sentence_transformer_model, only Hugging Face sentence embedding models with up to 4096 dimensions are currently supported. The upper limit comes from Neo4j's vector index. Make sure your chosen model meets this requirement.

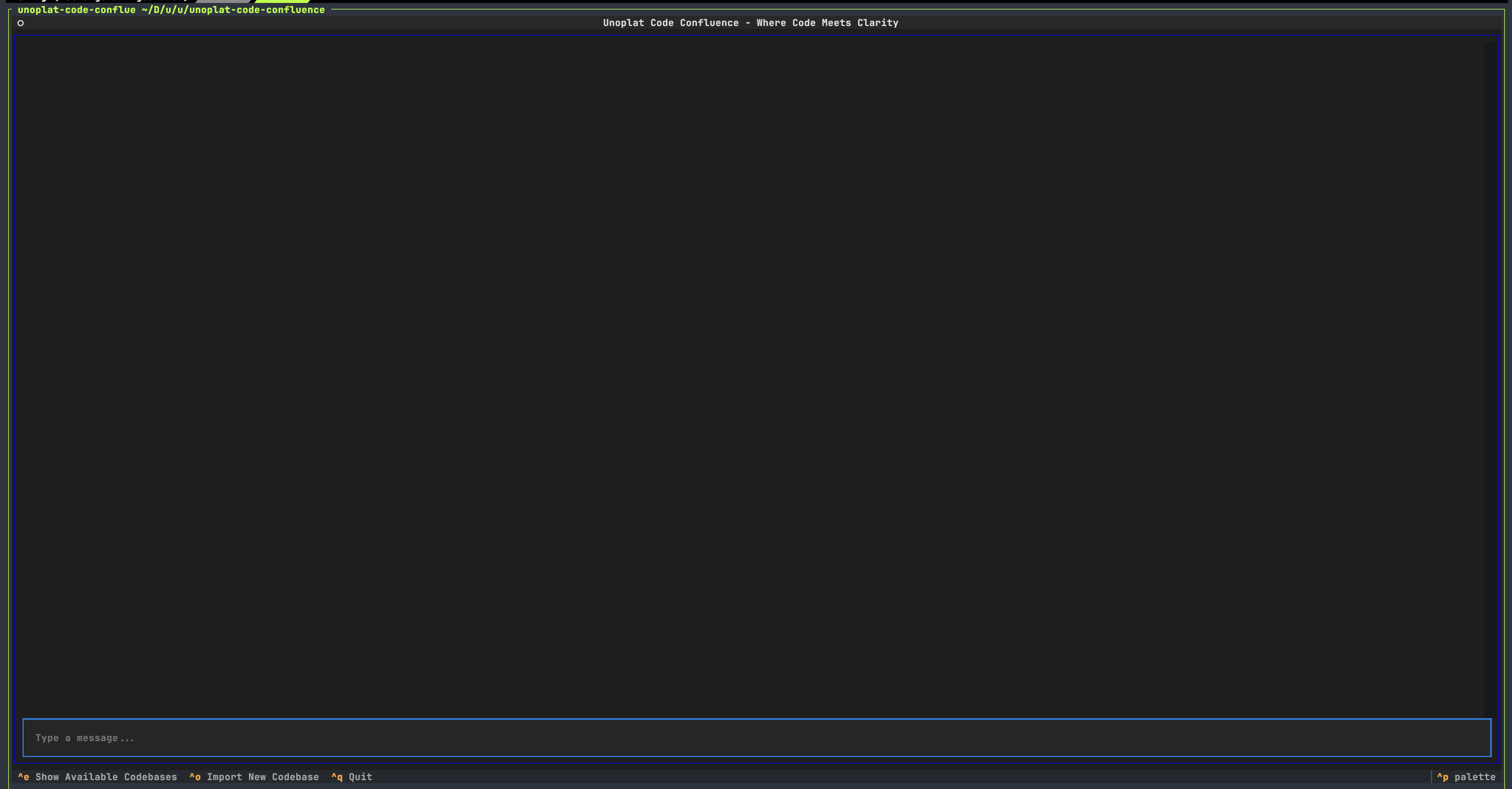

Launch Query Engine

- Installation

pipx install 'git+https://github.com/unoplat/unoplat-code-confluence.git@v0.5.0#subdirectory=unoplat-code-confluence-query-engine'

- Run the Query Engine

unoplat-code-confluence-query-engine --config /path/to/your/config.json

- Example Run

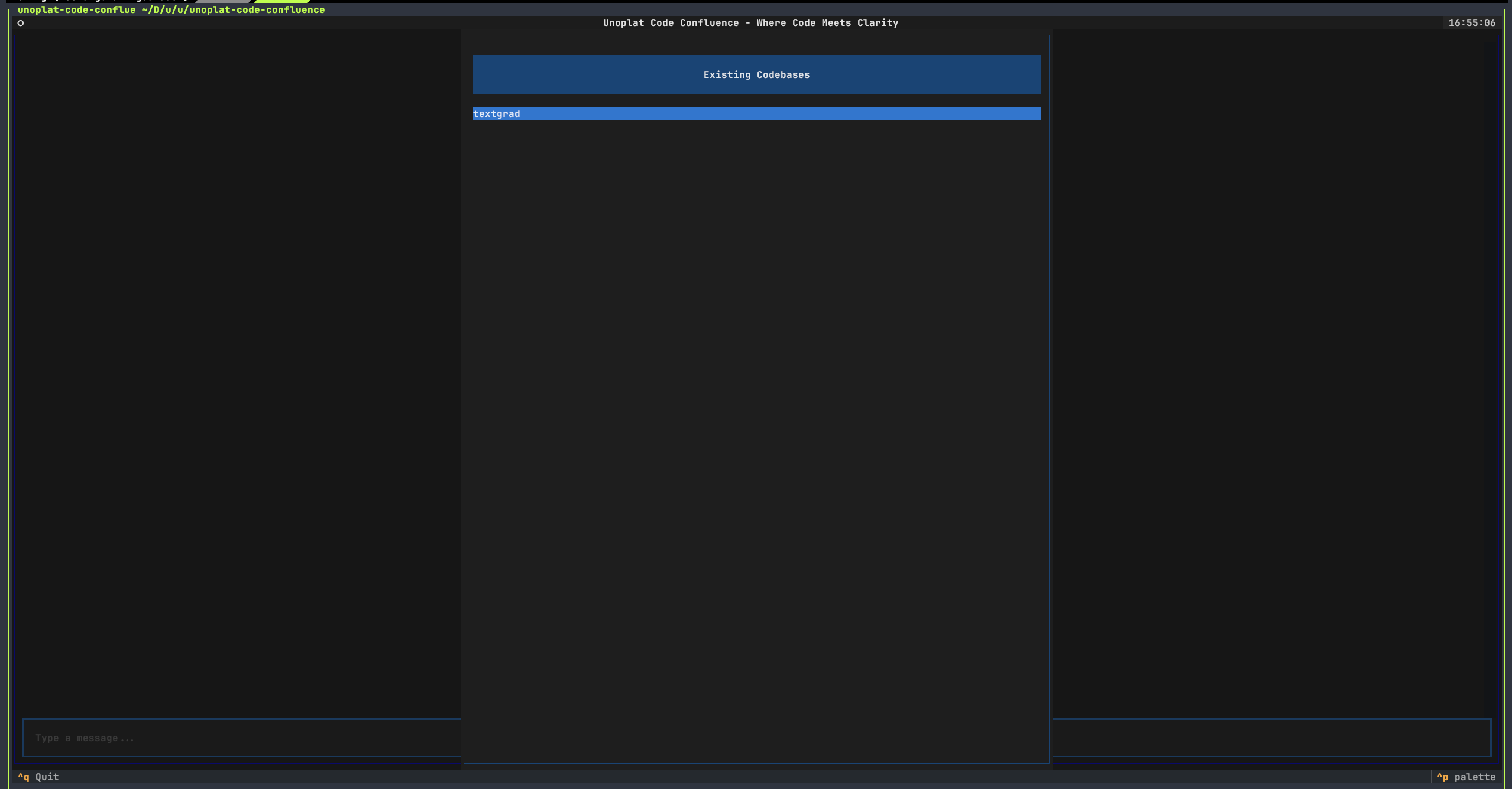

The textgrad codebase was added to the graph database through the ingestion utility configuration, so you can now chat with it. To view existing codebases, press Ctrl + E.